Examples#

This section contains some complete examples that demonstrate the main features of requests-cache.

Articles#

Some articles and blog posts that discuss requests-cache:

PyBites: Module of the Week: requests-cache for repeated API calls

Real Python: Caching External API Requests

Thomas Gorham: Faster Backtesting with requests-cache

Tim O’Hearn: Pragmatic Usage of requests-cache

Valdir Stumm Jr: Tips for boosting your Python scripts

Python Web Scraping (2nd Edition): Exploring requests-cache

Cui Qingcai: 一个神器,大幅提升爬取效率 (A package that greatly improves crawling efficiency)

Scripts#

The following scripts can also be found in the examples/ folder on GitHub.

Basic usage (with sessions)#

A simple example using requests-cache with httpbin

Basic usage (with patching)#

The same as basic_sessions.py, but using Patching

Cache expiration#

An example of setting expiration for individual requests

URL patterns#

An example of Expiration With URL Patterns

PyGithub#

An example of caching GitHub API requests with PyGithub.

This example demonstrates the following features:

Patching: PyGithub uses requests, but the session it uses is not easily accessible. In this case, using install_cache() is the easiest approach.

URL Patterns: Since we’re using patching, this example adds an optional safety measure to avoid unintentionally caching any non-GitHub requests elsewhere in your code.

Cache-Control: The GitHub API provides Cache-Control headers, so we can use those to set expiration.

Conditional Requests: The GitHub API also supports conditional requests. Even after responses expire, we can still make use of the cache until the remote content actually changes.

Rate limiting: The GitHub API is rate-limited at 5000 requests per hour if authenticated, or only 60 requests per hour otherwise. This makes caching especially useful, because cache hits and 304 Not Modified responses (from conditional requests) are not counted against the rate limit.

Cache Inspection: After calling some PyGithub functions, we can take a look at the cache contents to see the actual API requests that were sent.

Security: If you use a personal access token, it will be sent to the GitHub API via the Authorization header. This is not something you want to store in the cache if your storage backend is unsecured, so Authorization and other common auth headers/params are redacted by default. This example shows how to verify this.

Multi-threaded requests#

An example of making multi-threaded cached requests, adapted from the Python docs for ThreadPoolExecutor.

Logging requests#

An example of testing the cache to prove that it’s not making more requests than expected.
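One way to check this is to count how many requests actually reach the transport layer. The sketch below is not the script from the examples folder: it temporarily wraps `HTTPAdapter.send` from requests, and the helper name `count_real_requests` is hypothetical. Cache hits never reach the adapter, so they are not counted.

```python
from contextlib import contextmanager
from unittest.mock import patch

from requests.adapters import HTTPAdapter


@contextmanager
def count_real_requests():
    """Count requests that reach the HTTP adapter while the block is active."""
    original_send = HTTPAdapter.send
    counter = {'sent': 0}

    def counting_send(self, request, **kwargs):
        counter['sent'] += 1
        # Delegate to the real implementation so behavior is unchanged
        return original_send(self, request, **kwargs)

    # patch.object restores the original method when the block exits
    with patch.object(HTTPAdapter, 'send', counting_send):
        yield counter
```

For example, sending the same GET request several times through a CachedSession inside `with count_real_requests() as counter:` should leave `counter['sent']` at 1, assuming the response is cacheable and has not expired.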

External configuration#

An example of loading CachedSession settings from an external config file.

Limitations:

Does not include backend or serializer settings

Does not include settings specified as Python expressions, for example timedelta objects or callback functions

Cache speed test#

An example of benchmarking cache write speeds with semi-randomized response content

Usage (optionally for a specific backend and/or serializer):

python benchmark.py -b <backend> -s <serializer>

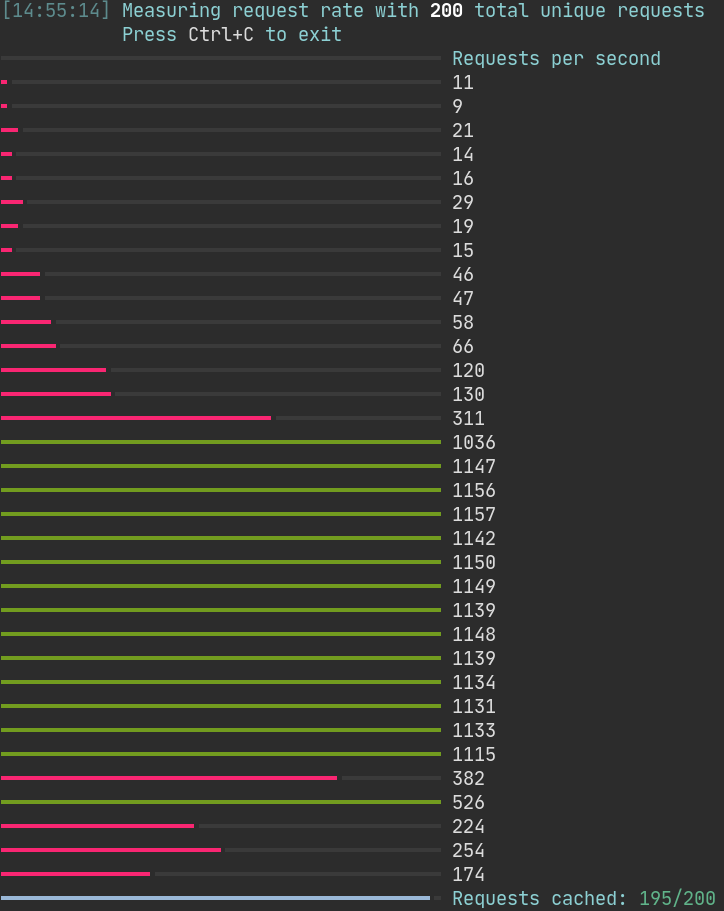

Requests per second graph#

This example displays a graph of request rates over time. Requests are continuously sent to URLs randomly picked from a fixed number of possible URLs. This demonstrates how average request rate increases as the proportion of cached requests increases.

Try running this example with different cache settings and URLs to see how the graph changes.

Using with GitHub Actions#

This example shows how to use requests-cache with GitHub Actions. Key points:

Create the cache file within the CI project directory

You can use actions/cache to persist the cache file across workflow runs

You can use a constant cache key within this action to let requests-cache handle expiration

Converting an old cache#

Example of converting data cached in older versions of requests-cache (<=0.5.2) into the current format

Custom request matcher#

Example of a custom request matcher that caches a new response if the version of requests-cache, requests, or urllib3 changes.

This generally isn’t needed, since anything that causes a deserialization error will simply result in a new request being sent and cached. But you might want to include a library version in your cache key if, for example, you suspect a change in the library does not cause errors but results in different response content.

This uses info from requests.help.info(). You can also preview this info from the command line to see what else is available:

python -m requests.help

Backtesting with time-machine#

An example of using the time-machine library for backtesting, e.g., testing with cached responses that were available at an arbitrary time in the past.

VCR Export#

Example utilities to export responses to a format compatible with VCR-based libraries, including: